In Q2 FY2024 earnings call, Satya Nadella offered a compelling proxy for IT-Security leaders around GenAI. Likening it to a comprehensive database of all enterprise documents and communications, but with queries being made in natural language. This analogy is a powerful one, inviting us to consider how GenAI security should be thought about, maybe not very different from traditional data & infrastructure security.

Let’s take a moment to step back and envision what GenAI’s impact can be for businesses, both big and small. Let’s look into some examples from major software companies that illustrate how GenAI has influenced their performance. Consider for e.g. Microsoft, where Azure AI boasts over 53,000 customers. Or GitHub, with more than 50,000 organizations using GitHub Copilot. And, ServiceNow, which has seen a staggering 50%+ improvement in developer productivity by deploying internal tools powered by GenAI. They’ve even introduced a Pro Plus SKU, monetizing it at a 60% premium over their base SKU. These examples underscore a critical point: the importance of leveraging GenAI for all companies aiming to enhance their bottom line and drive top-line growth is undeniable.

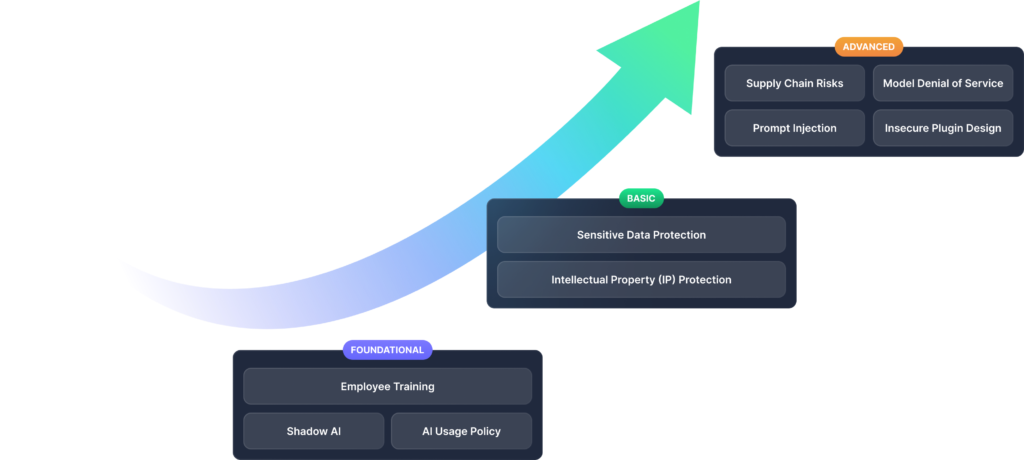

Let’s come back to GenAI adoption concerns from CXOs, a recent Gartner study, surveying across ten industries, highlights the primary adoption challenges for GenAI as shown below. The concerns range from privacy issues to intellectual property loss. Over the past 6–9 months, my discussions with CISOs and CIOs across diverse sectors, from Financial Services to Retail, have revealed that their adoption maturity regarding GenAI typically fall in three distinct phases.

Foundational: This phase is all about the foundations — how can employees and contractors use “Public GenAI” safely and responsibly? Public GenAI refers to tools like ChatGPT, Perplexity, Claude, GitHub Co-Pilot, Bard, and many others.

Basic: The focus here shifts to organizational efficiency, or in simpler terms, reducing costs. In this phase, companies begin deploying internal GenAI-powered tools for customer support, developers, product etc., aiming to boost efficiency.

Advanced: The question here is about value addition — how can GenAI be utilized to increase revenue for my business? A prime example is ServiceNow’s Pro-Plus SKU, thanks to the added value of integrated GenAI.

Implementing GenAI Security should mirror a similar adoption maturity model — we call this GenAI Security Maturity Model. It offers an approach for Infrastructure, Security, and Privacy leaders to securely adopt GenAI in a manner that aligns with their organization’s GenAI readiness and risk profile.

This GenAI Security maturity model has been a cornerstone of my discussions with CIOs and CISOs. Most have found it helpful to conceptualize Securing GenAI. While it’s not exhaustive, it certainly sparks thoughts among CXOs about how to navigate the GenAI landscape securely.

Let’s delve into some of the top risks discussed in the maturity model and some questions IT-Security leaders should consider as you adopt GenAI.

Shadow AI involves AI applications and systems used without formal IT department approval, akin to Shadow IT, posing significant security and compliance risks.

- Which GenAI applications are being utilized by our end-users?

- Are there enterprise applications integrated with GenAI?

- How are we managing and governing sandboxed GenAI usage?

IP Protection is about safeguarding proprietary assets like sensitive financials, customer conversations, source code, and product documents. The incident with Samsung employees inadvertently sharing code with ChatGPT, leading to restricted GenAI access, is a case in point. This concern is echoed by many IP-conscious firms, including JPMC.

Cyberheaven’s analysis of 1.6 million users across various companies revealed about 1,000 IP violations in a week, involving internal data, client data, source code, and project files.

- What are our top 3–5 Intellectual Property categories (e.g., Code, Customer Data, Financial Projections)?

- How are we safeguarding our source code from accidental GenAI uploads?

- What additional security controls are in place for GitHub Co-Pilot usage?

Privacy Protection entails strategies and policies to protect personal information (PII, PCI, PHI) from unauthorized use, access, or disclosure.

- Can we address this through existing educational and process controls

- Are our current DLP solutions adequate, or will they trigger excessive alerts?

- How are we managing DLP risks in User <> GenAI and App <> GenAI interactions?

Prompt Injection represents a sophisticated cybersecurity threat in GenAI, where direct attacks manipulate AI responses through malicious prompts, and indirect attacks subtly bias the output from GenAI.

- Do we have external LLM-Integrated Applications in production?

- What safeguards are in place against Prompt Injection Attacks?

- How are we assessing risks related to Public AI Agents or Plug-Ins?

Supply Chain Risks arise from vulnerabilities in the LLM application lifecycle, including third-party datasets, pre-trained models, and plugins.

- Do we use any internal or external LLM-Integrated Applications?

- What models and datasets have we employed?

- Are there any GenAI Plugins we interact with?

Summary

Satya Nadella provides a simple framework for internalizing GenAI for IT-Security teams across enterprises. In today’s world, becoming AI-enabled isn’t just an option; it’s a necessity. The success stories of forward-thinking companies using GenAI are not just inspiring but also illustrative of the potential that awaits those ready to embark on this journey.

When it comes to securing GenAI, it’s crucial to adhere to a structured approach: begin with the foundational elements, progress to basics, and then advance to more complex strategies.

If you’re interested in a deeper discussion or even in contributing to refining this perspective, I’d be delighted to connect. Feel free to send me a direct message on LinkedIn here. Let’s explore the possibilities of Securing GenAI with a Risk Maturity Framework that’s simple to operationalize.